Kafka Explained

A super-efficient postal system for data streaming.

Apache Kafka is an open-source, distributed event-streaming platform. A useful way to picture it is as a super-efficient postal system for data. Imagine you have a lot of messages (data) that need to get from one place to another quickly and reliably. Kafka helps by organizing, storing, and delivering those messages to wherever they need to go.

Key Concepts

1. Topics

Topics are like mailboxes. Each topic is a named category that holds one specific type of message. For example, you might have one topic for orders, another for user activity, and another for error logs.

2. Producers

Producers are like people who send mail. They create messages and put them into the right topics (mailboxes). For instance, an online store's order processing system might produce messages about new orders and send them to the "orders" topic.
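To make the producer's job concrete, here is a minimal sketch that uses a plain Python dictionary as a hypothetical stand-in for a Kafka cluster (`cluster` and `produce` are illustrative names, not a Kafka client API). Each topic is modeled as an append-only list, and every appended message gets a sequential position, which Kafka calls an offset.

```python
import json
from collections import defaultdict

# Hypothetical in-memory stand-in for a Kafka cluster: topic name -> list of (key, value) records.
cluster = defaultdict(list)


def produce(topic: str, key: str, value: dict) -> int:
    """Serialize the value as JSON, append it to the topic, and return its offset."""
    cluster[topic].append((key, json.dumps(value)))
    return len(cluster[topic]) - 1


# The online store's order-processing system publishes one message per new order.
first = produce("orders", key="order-1001", value={"item": "book", "qty": 2})
second = produce("orders", key="order-1002", value={"item": "lamp", "qty": 1})
print(first, second)  # 0 1
```

With a real client library such as `confluent-kafka` for Python, the equivalent step is roughly creating a `Producer` with a `bootstrap.servers` setting, calling `produce("orders", key=..., value=...)`, and then `flush()`; check that library's documentation for the exact options.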

3. Consumers

Consumers are like people who receive mail. They read messages from the topics they're interested in. For example, a shipping service might read new orders from the "orders" topic to know what to ship.
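Unlike real mail, reading a Kafka message does not remove it from the topic; each consumer simply remembers how far it has read (its offset) and asks for newer messages. The sketch below models that with a hypothetical `Consumer` class over a plain list. The `poll` name echoes Kafka client terminology, but the class is illustrative, not a real client API.

```python
class Consumer:
    """Toy consumer that tracks its own read position (offset) in a topic's log."""

    def __init__(self, log: list):
        self.log = log
        self.offset = 0  # index of the next message to read

    def poll(self, max_records: int = 10) -> list:
        """Return up to max_records unread messages and advance the offset."""
        batch = self.log[self.offset:self.offset + max_records]
        self.offset += len(batch)
        return batch


orders = ["order #1001", "order #1002", "order #1003"]  # the "orders" topic
shipping = Consumer(orders)
analytics = Consumer(orders)  # a second, independent reader

print(shipping.poll(max_records=2))  # ['order #1001', 'order #1002']
print(shipping.poll())               # ['order #1003']
print(analytics.poll())              # all three orders: nothing was removed
print(len(orders))                   # 3
```

Because each consumer owns its offset, the shipping service and an analytics service can read the same topic at their own pace without interfering with each other.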

4. Brokers

Brokers are the post offices. They are the servers that make up a Kafka cluster: they receive messages from producers, store them on disk, and serve them to consumers on request, which is how messages get from producers to consumers efficiently and reliably.
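Brokers achieve reliability largely through replication: a topic's data is copied to several brokers (the number of copies is the topic's replication factor), so losing one broker does not lose data. The sketch below is a simplified, hypothetical model of that idea; the `Broker` class and `replicated_write` function are ours. In real Kafka one replica acts as the leader and the others copy from it, whereas this toy writes to all live replicas directly. The `min_in_sync` parameter loosely mirrors Kafka's `min.insync.replicas` setting: if too few brokers are available, the write is refused rather than stored with too few copies.

```python
class Broker:
    """Toy broker: an id, an up/down flag, and its local copy of one topic's log."""

    def __init__(self, broker_id: int):
        self.broker_id = broker_id
        self.alive = True
        self.log = []


def replicated_write(replicas: list, message: str, min_in_sync: int = 2) -> bool:
    """Store the message on every live replica, or refuse if too few are live.

    Returns True when the write is acknowledged, False when it is rejected.
    """
    live = [b for b in replicas if b.alive]
    if len(live) < min_in_sync:
        return False  # not enough copies possible: reject rather than risk data loss
    for broker in live:
        broker.log.append(message)
    return True


brokers = [Broker(i) for i in range(3)]   # replication factor 3
replicated_write(brokers, "order #1001")  # stored on all three brokers

brokers[0].alive = False                  # one broker crashes
print(brokers[1].log)                     # ['order #1001'] is still available
print(replicated_write(brokers, "order #1002"))  # True: two live replicas remain
brokers[1].alive = False                  # a second broker crashes
print(replicated_write(brokers, "order #1003"))  # False: only one replica left
```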

How It Works

Sending Messages: When a new piece of data (message) is generated, a producer sends it to a specific topic.

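Storing Messages: Kafka writes these messages to disk and can replicate them across several brokers, so they survive the failure of a single broker. Messages are kept for a configurable retention period (or size limit) rather than deleted as soon as they are read.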

Reading Messages: Consumers read messages from the topics they are interested in. They can read messages in real time as they arrive, or later (for example, to replay past data), depending on their needs.
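The choice between reading messages as they arrive and reading them later comes down to where a consumer starts in the topic's log. The sketch below uses a hypothetical `start_offset` helper whose two policies are named after Kafka's `auto.offset.reset` consumer setting (`earliest` and `latest`); in real Kafka that setting only applies when the consumer group has no committed offset yet.

```python
def start_offset(log: list, reset: str) -> int:
    """Pick where a new consumer starts reading.

    'earliest' replays everything already stored; 'latest' skips to new messages only.
    """
    if reset == "earliest":
        return 0
    if reset == "latest":
        return len(log)
    raise ValueError(f"unknown reset policy: {reset!r}")


log = ["order #1001", "order #1002"]  # messages already stored in the topic
replay_from = start_offset(log, "earliest")
live_from = start_offset(log, "latest")

log.append("order #1003")             # a new message arrives

print(log[replay_from:])  # ['order #1001', 'order #1002', 'order #1003']
print(log[live_from:])    # ['order #1003']
```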

5 Real Use Cases For Apache Kafka

1 - Publish-subscribe

In a publish-subscribe model, Kafka acts as a message broker between publishers and subscribers. Publishers send messages to specific topics, and subscribers receive these messages. This model is particularly useful for distributing information to multiple recipients in real time.

Example: A news publisher sends updates on different topics like sports, finance, and technology. Subscribers interested in these topics receive the updates immediately.
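A minimal sketch of the news example, using hypothetical in-memory names (`topics`, `publish`, `Subscriber`): each subscriber keeps its own read position per topic, so a message published to a topic reaches every subscriber of that topic. In real Kafka, independent subscribers like these would typically be separate consumer groups.

```python
from collections import defaultdict

# Hypothetical in-memory topics: topic name -> list of messages.
topics = defaultdict(list)


def publish(topic: str, message: str) -> None:
    """Publisher side: append the message to the topic."""
    topics[topic].append(message)


class Subscriber:
    """Keeps an independent read position for each subscribed topic."""

    def __init__(self, name: str, subscriptions: list):
        self.name = name
        self.offsets = {topic: 0 for topic in subscriptions}

    def fetch(self) -> list:
        """Return all unread messages from the subscribed topics."""
        received = []
        for topic, offset in list(self.offsets.items()):
            new = topics[topic][offset:]
            received.extend(new)
            self.offsets[topic] = offset + len(new)
        return received


alice = Subscriber("alice", ["sports", "finance"])
bob = Subscriber("bob", ["technology", "finance"])

publish("sports", "Final score: 2-1")
publish("finance", "Markets close higher")
publish("technology", "New chip announced")

print(alice.fetch())  # ['Final score: 2-1', 'Markets close higher']
print(bob.fetch())    # ['New chip announced', 'Markets close higher']
```

Both subscribers receive the finance update, while each also gets only the other topics it asked for.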

2 - Log aggregation

Kafka efficiently collects and aggregates logs from multiple sources: applications send their logs to Kafka topics, where downstream systems can process, store, and analyze them for insights.

Example: A tech company collects logs from various applications to monitor performance and detect issues in real time.
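As a sketch, assume several applications write structured log records to one shared topic (here a plain list named `app_logs`, filled by a hypothetical `ship_log` helper). A downstream consumer can then aggregate the combined stream, for example by counting errors per application to spot which service is misbehaving.

```python
from collections import Counter

# Hypothetical shared topic that several applications write their logs to.
app_logs = []


def ship_log(app: str, level: str, text: str) -> None:
    """Producer side: each application appends a structured log record."""
    app_logs.append({"app": app, "level": level, "text": text})


ship_log("checkout", "INFO", "order placed")
ship_log("checkout", "ERROR", "payment timeout")
ship_log("search", "ERROR", "index unavailable")
ship_log("checkout", "ERROR", "payment timeout")

# Consumer side: aggregate the combined stream, here counting errors per application.
errors_per_app = Counter(r["app"] for r in app_logs if r["level"] == "ERROR")
print(errors_per_app.most_common())  # [('checkout', 2), ('search', 1)]
```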

3 - Log shipping

Kafka simplifies the process of log shipping by replicating logs across different locations. Primary logs are recorded, shipped to Kafka topics, and then replicated to other locations to ensure data availability and disaster recovery.

Example: A financial institution replicates transaction logs to multiple data centers for backup and recovery purposes.
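Log shipping boils down to copying records from a source log to a target and remembering how far the copy has progressed, so a restarted job neither skips nor duplicates records. The hypothetical `mirror` function below sketches that idea with plain lists; in practice, cross-cluster replication is usually handled by a dedicated tool such as Kafka's MirrorMaker 2 rather than hand-written code.

```python
def mirror(source: list, target: list, copied_upto: int) -> int:
    """Copy records the target has not seen yet and return the new position.

    Tracking `copied_upto` lets the job resume after a restart without
    re-copying or skipping records.
    """
    new_records = source[copied_upto:]
    target.extend(new_records)
    return copied_upto + len(new_records)


primary = ["txn-1", "txn-2"]   # transaction log in the primary data center
backup = []                    # copy in a second data center

position = mirror(primary, backup, 0)         # initial sync
primary.append("txn-3")                       # more transactions arrive
position = mirror(primary, backup, position)  # incremental sync

print(backup)    # ['txn-1', 'txn-2', 'txn-3']
print(position)  # 3
```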

4 - Staged Event-Driven Architecture (SEDA) Pipelines

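Kafka supports SEDA pipelines, where events are processed in stages. Each stage reads events from one topic, processes them independently, and writes its results to the topic the next stage reads from. Because the topics decouple the stages, each one can be scaled or restarted without affecting the others, which improves scalability and fault tolerance.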

Example: An e-commerce platform processes user actions (like page views and purchases) in stages to analyze behavior and personalize recommendations.
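A minimal sketch of the e-commerce example, assuming plain Python lists and dicts stand in for the topics between stages (all names here are hypothetical): a cleaning stage, a profiling stage, and a final recommendation lookup. Each stage only knows its input and output, which is what lets real SEDA stages run and scale independently; in a real deployment each stage would usually be its own consumer/producer application or stream-processing job.

```python
from collections import Counter

# Hypothetical "topics" connecting the stages: each stage reads one and writes the next.
raw_events = [
    {"user": "u1", "action": "view", "product": "Shoes"},
    {"user": "u1", "action": "purchase", "product": "shoes"},
    {"user": "u2", "action": "view", "product": "hat"},
    {"user": "u2", "action": "view", "product": "Hat"},
    {"user": "u2", "action": "view", "product": "shoes"},
    {"user": "u3"},  # malformed event: missing fields
]
clean_events = []
interests = {}

REQUIRED = ("user", "action", "product")


def clean_stage(source: list, sink: list) -> None:
    """Stage 1: drop malformed events and lower-case the fields."""
    for event in source:
        if all(name in event for name in REQUIRED):
            sink.append({name: event[name].lower() for name in REQUIRED})


def profile_stage(source: list, sink: dict) -> None:
    """Stage 2: count each user's interactions per product."""
    for event in source:
        sink.setdefault(event["user"], Counter())[event["product"]] += 1


def recommend(profiles: dict, user: str) -> str:
    """Final step: suggest the product the user interacted with most."""
    return profiles[user].most_common(1)[0][0]


clean_stage(raw_events, clean_events)
profile_stage(clean_events, interests)
print(recommend(interests, "u1"), recommend(interests, "u2"))  # shoes hat
```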

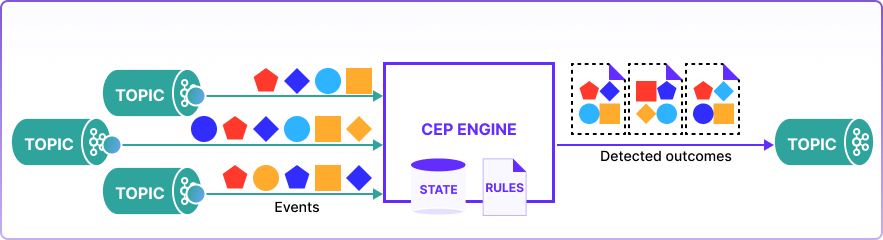

5 - Complex Event Processing (CEP)

Kafka is used as the event backbone for complex event processing (CEP): it delivers streams of events to CEP engines, which analyze them in real time, detect patterns, and trigger actions based on predefined rules.

Example: A stock trading system uses CEP to monitor market data, detect trends, and execute trades automatically based on specific criteria.
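Here is a toy version of one CEP rule for the trading example, assuming a stream of prices and a hypothetical rule that "three consecutive price increases signal an uptrend". A sliding window (`collections.deque` with `maxlen`) holds just the recent events the pattern needs. Production systems would express such rules in a CEP or stream-processing engine rather than hand-rolled code, and real trading criteria are far more involved.

```python
from collections import deque


def detect_uptrend(prices, rises=3):
    """Yield (index, price) each time the last `rises` price changes were all increases."""
    window = deque(maxlen=rises + 1)  # n rises need n + 1 consecutive prices
    for i, price in enumerate(prices):
        window.append(price)
        recent = list(window)
        if len(recent) == rises + 1 and all(a < b for a, b in zip(recent, recent[1:])):
            yield i, price


ticks = [100, 101, 102, 103, 102, 104, 105, 106, 107]
for i, price in detect_uptrend(ticks):
    # In a real system this is where an order might be placed or an alert raised.
    print(f"uptrend detected at tick {i} (price {price})")
```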

By understanding these five applications, businesses can better appreciate Kafka's role in modern data architecture and explore ways to integrate it into their operations for better data management and processing.


Companies Using Kafka

Several industry leaders use Kafka to handle their real-time data needs:

LinkedIn

Netflix

Uber

Grab

Lyft

X (formerly Twitter)

These companies leverage Kafka's capabilities to manage vast amounts of data, ensuring real-time processing, reliability, and scalability.

Thinking of Kafka as a sophisticated postal system for data makes it easier to understand how it helps manage and process large amounts of information quickly and reliably.
